I recently helped a customer get going with Citrix Cloud (Virtual Apps and Desktops service) and whilst in the process of configuring their published apps they asked for a handful of custom icons. A number of their webapps run using Chrome but they wanted to have a custom icon for the application that was more...

My first FAIL!

There’s nothing quite like that short-lived ‘buzz’ after walking out of a tech exam with a big phat PASS! Back in January, however, I came out of the examination room with what’s best described as a ‘stunned stagger’ in place of the more familiar ‘victory swagger’ 🙂 What beat me? Office 365, 70-347 – Enabling...

VMUG Scotland… A first for me…

It's funny... there was a period of my career where VMware was a main focus and I'd spend countless hours implementing, supporting and upgrading the platform but I never found myself attending a VMUG. This wasn't through lack of interest by any means... just busy, I guess. Now that I've gone stagnant with the technology...

How to install the Kaseya VSA Agent on a non-persistent machine

Deploying Kaseya VSA agents to provisioned workstations and servers from a standard image can be a challenge. Using the typical methods results in duplicate GUIDs due to the nature of the technology, making it impossible to accurately monitor and report on these types of machines. The following procedure has been tried and tested (successfully) numerous times on Citrix...

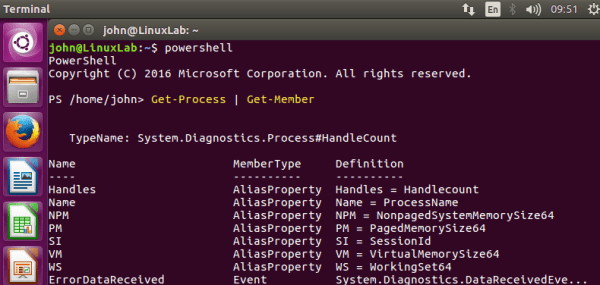

PowerShell for all as it goes Open Source

First off, kudos to that 'Technical Fellow' Jeffrey Snover and his team in the PowerShell division at Microsoft. PowerShell is a management framework for task automation and a must for any serious IT Professional working with the Windows platform. As this blog title suggests, Microsoft open sourced it and as of last week it's readily...

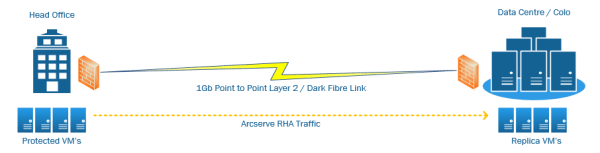

Arcserve RHA Reverse Scenario (Failback) Considerations & Preparation

Arcserve RHA (Replication & High Availability) is one of the core products within the Arcserve suite. At a glance, the Arcserve solution is made up of 3 key components:

- Arcserve Backup - Tape Backups
- Arcserve UDP / D2D - Disk to Disk
- Arcserve RHA - Replication & High Availability

Having years of experience operating &...

Intel, Cloud Telemetry and Jevons Paradox

I recently heard on a tech podcast that Intel were contributing to the open source community via their open telemetry framework called 'Snap'. Snap is designed to collect, process and publish system data through a single API. Here's a quick diagram along with the project goals taken from the GitHub page that will provide you...

Citrix PVS Image Process – Best Practices

On completion of the implementation and handover of any technology to an IT department / client, high quality build and configuration documentation is a must. This may seem obvious but I've been in many situations where project time gets squeezed due to budget or to get a deal over the line and a 'day or...

Seven Deadly… Tools.

I have tried, tested and benefited from a plethora of nifty tools over the years. Some good, some bad... and some, well, just downright ugly!

Securing Citrix NetScaler… The basics!

While there is an abundance of best practices and white papers on how to secure your NetScaler, I come across many implementations that are worryingly insecure. Whenever I highlight this with the IT Manager, engineering or the security team they are naturally keen to plug these holes ASAP. After some digging I normally find it's...